One model that many people have used for making sense of the multiple services in OpenStack is that of a series of layers, with the ‘compute starter kit’ projects forming the base. Jay Pipes recently wrote what may prove to be the canonical distillation (this post is an edited version of my response):

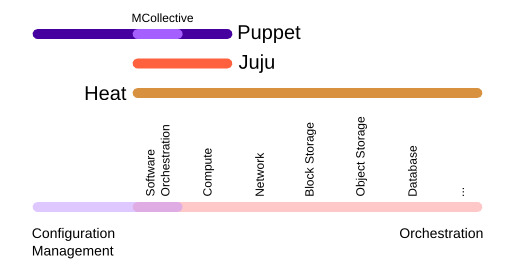

> Nova, Neutron, Cinder, Keystone and Glance are a definitive lower level of an OpenStack deployment. They represent a set of required integrated services that supply the most basic infrastructure for datacenter resource management when deploying OpenStack. Depending on the particular use cases and workloads the OpenStack deployer wishes to promote, an additional layer of services provides workload orchestration and workflow management capabilities.

I am going to explain why this viewpoint is wrong, but first I want to acknowledge what is attractive about it (even to me). It contains a genuinely useful observation that leads to a real insight.

The insight is that whereas the installation instructions for something like Kubernetes usually contain an implicit assumption that you start with a working datacenter, the same is not true for OpenStack. OpenStack is the only open source project concentrating on the gap between a rack full of unconfigured equipment and somewhere that you could run a higher-level service like Kubernetes. We write the bit where the rubber meets the road, and if we do not, there is nobody else to do it! There is an almost infinite variety of different applications and they will all need different parts of the higher layers, but ultimately they must be reified in a physical datacenter, and when they are, OpenStack will be there: that is the core of what we are building.

It is only the tiniest of leaps from seeing that idea as attractive, useful, and genuinely insightful to believing it is correct. I cannot really blame anybody who made that leap. But an abyss awaits them nonetheless.

Back in the 1960s and early 1970s there was this idea about Artificial Intelligence: even a two-year-old human can (for example) recognise images with a high degree of accuracy, but doing (say) calculus is extremely hard in comparison and takes years of training. But computers can already do calculus! Ergo, we have solved the hardest part already and building the rest out of that will be trivial, AGI is just around the corner, and so on. The popularity of this idea arguably helped create the AI bubble, and the inevitable collision with the reality of its fundamental wrongness led to the AI Winter. Because, in fact, though you can build logic out of many layers of heuristics (as human brains do), it absolutely does not follow that it is trivial to build other things that also require layers of heuristics out of some basic logic building blocks. (In contrast, the AI technology of the present, which is showing more promise, is called Deep Learning because it consists literally of multiple layers of heuristics. It is also still considerably worse at image recognition than any two-year-old human.)

I see the problem with the OpenStack-as-layers model as being analogous. (I am not suggesting there will be a full-on OpenStack Winter, but we are well past the Peak of Inflated Expectations.) With Nova, Keystone, Glance, Neutron, and Cinder you can build a pretty good Virtual Private Server hosting service. But it is a mistake to think that cloud is something you get by layering stuff on top of VPS hosting. It is relatively easy to build a VPS host on top of a cloud, just like teaching someone calculus. But it is enormously difficult to build a cloud on top of a VPS host (it would involve a lot of expensive layers of abstraction, comparable to building artificial neurons in software).

That is all very abstract, so let me bring in a concrete example. Kubernetes is event-driven at a very fundamental level: when a pod or a whole kubelet dies, Kubernetes gets a notification immediately and that prompts it to reschedule the workload. In contrast, Nova/Cinder/&c. are a black hole. You cannot even build a sane dashboard for your VPS—let alone cloud-style orchestration—over them, because it will have to spend all of its time polling the APIs to find out if anything happened. There is an entire separate project, that almost no deployments include, basically dedicated to spelunking in the compute node without Nova’s knowledge to try to surface this information. It is no criticism of the team in question, who are doing something that desperately needs doing in the only way that is really open to them, but the result is an embarrassingly bad architecture for OpenStack as a whole.
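To make the cost of polling concrete, here is a minimal sketch in Python. Everything in it is hypothetical: `FakeComputeAPI`, `EventSource`, and the server names are illustrative stand-ins, not real Nova or Kubernetes interfaces. It only contrasts how many calls a client makes to learn about a single failure under each model.

```python
from typing import Callable, Dict, List, Tuple

Change = Tuple[str, str]  # (server name, new state)


class FakeComputeAPI:
    """Hypothetical query-only service: it answers questions but never calls back."""

    def __init__(self) -> None:
        self.state: Dict[str, str] = {"vm-1": "ACTIVE", "vm-2": "ACTIVE"}
        self.calls = 0

    def list_servers(self) -> Dict[str, str]:
        self.calls += 1
        return dict(self.state)


def poll_for_changes(api: FakeComputeAPI, ticks: int, fail_at: int) -> List[Change]:
    """Polling client: one API call per tick, whether or not anything changed."""
    known = api.list_servers()  # initial snapshot
    changes: List[Change] = []
    for tick in range(ticks):
        if tick == fail_at:
            api.state["vm-2"] = "ERROR"  # simulated out-of-band failure
        for name, state in api.list_servers().items():
            if known.get(name) != state:
                changes.append((name, state))
                known[name] = state
    return changes


class EventSource:
    """Hypothetical push-style service: subscribers hear about changes directly."""

    def __init__(self) -> None:
        self.sinks: List[Callable[[str, str], None]] = []
        self.deliveries = 0

    def subscribe(self, sink: Callable[[str, str], None]) -> None:
        self.sinks.append(sink)

    def emit(self, name: str, state: str) -> None:
        for sink in self.sinks:
            self.deliveries += 1
            sink(name, state)


api = FakeComputeAPI()
polled = poll_for_changes(api, ticks=10, fail_at=7)

source = EventSource()
pushed: List[Change] = []
source.subscribe(lambda name, state: pushed.append((name, state)))
source.emit("vm-2", "ERROR")

print(f"polling: {api.calls} calls for {len(polled)} change")
print(f"events:  {source.deliveries} delivery for {len(pushed)} change")
```

In this toy run the polling client makes eleven calls to observe one state change, and it only notices the failure on its next tick. The subscriber hears about it in a single delivery, at the moment it happens. A real system would also have to deal with lost messages and reconnects, which this sketch ignores.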

So yes, it is sometimes helpful to think about the fact that there is a group of components that own the low level interaction with outside systems (hardware, or IdM in the case of Keystone), and that almost every application will end up touching those directly or indirectly, while each using different subsets of the other functionality… but only in the awareness that those things also need to be built from the ground up as interlocking pieces in a larger puzzle.

Saying that the compute starter kit projects represent a ‘definitive lower level of an OpenStack deployment’ invites the listener to ignore the bigger picture; to imagine that if those lower level services just take care of their own needs then everything else can just build on top. That is a mistake, unless you believe that OpenStack needs only to provide enough building blocks to build VPS hosting out of, because support for all of those higher-level things does not just fall out for free. You have to consciously work at it.

Imagine for a moment that, knowing everything we know now, we had designed OpenStack around a system of event sources and sinks that are reliable in the face of hardware failures and network partitions, with components connecting into it to provide services to the user and to each other. That is what Kubernetes did. That is the key to its success. We need to enable something similar, because OpenStack is still necessary even in a world where Kubernetes exists.

One reason OpenStack is still necessary is the one we started with above: something needs to own the interaction with the underlying physical infrastructure, and the alternatives are all proprietary. Another place where OpenStack can provide value is by being less opinionated and allowing application developers to choose how the event sources and sinks are connected together. That means that users should, for example, be able to customise their own failover behaviour in ‘userspace’ rather than rely on the one-size-fits-all approach of handling everything automatically inside Kubernetes. This is theoretically an advantage of having separate projects instead of a monolithic design—though the fact that the various agents running on a compute node are more tightly bound to their corresponding services than to each other has the potential to offer the worst of both worlds.
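As a rough illustration of what failover policy in 'userspace' could look like, here is a minimal Python sketch. The `EventBus` class, the `host.down` event name, and the workload and host names are all assumptions invented for this example; nothing here corresponds to an existing OpenStack or Kubernetes API. The point is only that the platform emits the event and the application developer decides what is connected to it.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, DefaultDict, Dict, List


@dataclass(frozen=True)
class Event:
    kind: str     # hypothetical event name, e.g. "host.down"
    subject: str  # the resource the event is about, e.g. a host name


class EventBus:
    """Hypothetical bus: the platform emits events, users choose the sinks."""

    def __init__(self) -> None:
        self._sinks: DefaultDict[str, List[Callable[[Event], None]]] = defaultdict(list)

    def connect(self, kind: str, sink: Callable[[Event], None]) -> None:
        self._sinks[kind].append(sink)

    def emit(self, event: Event) -> None:
        for sink in self._sinks[event.kind]:
            sink(event)


# Which workload runs on which host; purely illustrative data.
placement: Dict[str, str] = {"db": "host-a", "web": "host-a", "batch": "host-a"}
actions: List[str] = []


def failover_policy(event: Event) -> None:
    """User-defined policy: move the database, queue the web tier for a
    restart, and deliberately take no action for the batch job."""
    for workload, host in placement.items():
        if host != event.subject:
            continue
        if workload == "db":
            placement[workload] = "host-b"
            actions.append("moved db to host-b")
        elif workload == "web":
            actions.append("queued web for restart")


bus = EventBus()
bus.connect("host.down", failover_policy)  # the user wires source to sink
bus.emit(Event("host.down", "host-a"))
print(actions)
```

A different application could connect a different function to the same event, or none at all, without the platform having to know. That is the kind of choice a one-size-fits-all controller does not offer.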

All of these thoughts will be used as fodder for writing a technical vision statement for OpenStack. My hope is that it will help align our focus as a community so that we can work together in the same direction instead of at cross-purposes. Along the way, we will need many discussions like this one to get to the root of what can be some quite subtle differences in interpretation that nevertheless lead to divergent assumptions. Please join in if you see one happening!